Using Autodistill, you can compare OWLv2 and LLaVA on your own images in a few lines of code.

Here is an example comparison:

To start a comparison, first install the required dependencies:

Next, create a new Python file and add the following code:

Above, replace the images in the `images` directory with the images you want to use.

Each image must be referenced by its absolute path.
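If your images sit in a local folder, one way to produce absolute paths is with Python's standard `pathlib` module. The sketch below is illustrative only and is not part of Autodistill. The helper name `absolute_image_paths` and the set of accepted file extensions are assumptions made for this example.

```python
from pathlib import Path

# Hypothetical helper for illustration; not part of Autodistill.
# The accepted extensions are an assumption; adjust them to your data.
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png"}


def absolute_image_paths(directory="images"):
    """Return sorted absolute paths of the image files directly inside `directory`."""
    root = Path(directory).resolve()  # convert a relative folder to an absolute one
    return sorted(
        str(path)
        for path in root.iterdir()
        if path.is_file() and path.suffix.lower() in IMAGE_EXTENSIONS
    )
```

Calling `absolute_image_paths("images")` returns a list of strings that you can use wherever the script expects absolute image paths.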

Then, run the script.

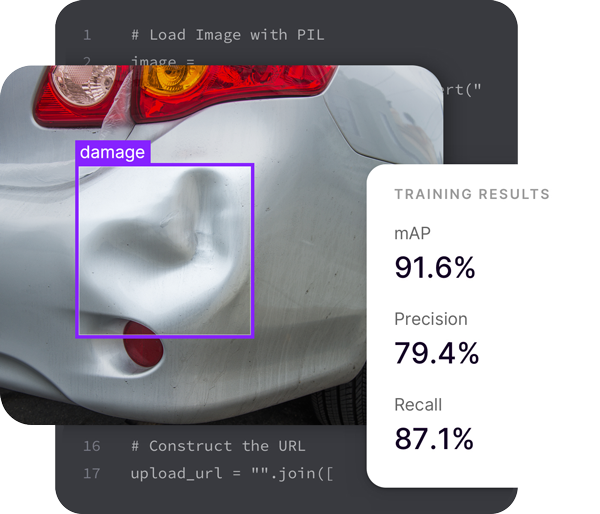

You should see a model comparison like this:

When you have chosen a model that works best for your use case, you can auto label a folder of images using the following code:

Both OWLv2 and LLaVA are commonly used in computer vision projects. Below, we compare and contrast OWLv2 and LLaVA.

OWLv2 is a transformer-based object detection model developed by Google Research. It is the successor to OWL-ViT.

LLaVA is an open source multimodal language model that you can use for visual question answering. It has limited support for object detection.
